Syntax in the Brain

Probing the brain's code for syntax, the abstract structure of language, music, and reasoning.

My research focuses on how the brain translates thought into language, using tools like ECoG, eye tracking, and syntactic priming. ECoG (electrocorticography) is the recording of electrical activity directly from the surface of the brain in awake human neurosurgical patients. It offers unparalleled spatial and temporal precision, allowing us to see where and when things are happening in the brain.

Probing the brain's code for syntax, the abstract structure of language, music, and reasoning.

How do speakers of Nungon, an endangered language of Papua New Guinea, speak their mind-bogglingly complex language?

Using ECoG and machine learning to detect what people are going to say before they say it.

Quixotic pronouns shed light on the relationship between speech production and comprehension.

Language learners spontaneously produce structures they've never been exposed to!

Testing a 50-year-old assumption: that the That-Trace Effect and Island Boundary-Gap Effect are the same.

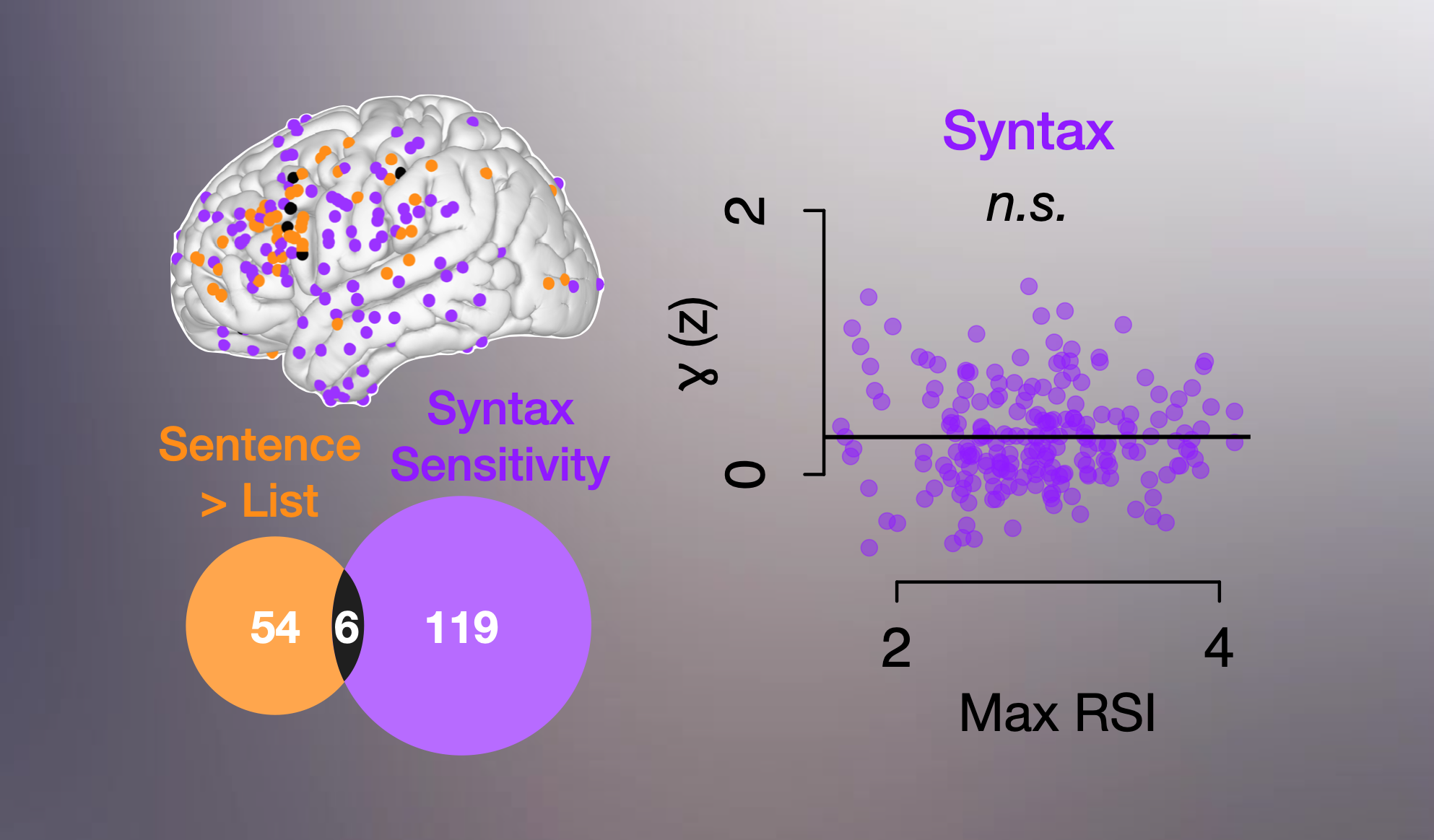

Syntax, the abstract structure of human language, is the evolutionary adaptation that sets our species' cognition apart. This project uses state-of-the-art machine learning and electrocorticography (ECoG, or measures of brain activity taken directly from the surface of the brain in awake neurosurgical patients) to understand how the brain encodes this central aspect of human cognition. Our data reveal an unexpected pattern: that syntax, unlike other types of information, does not elevate neural activity when it is processed.

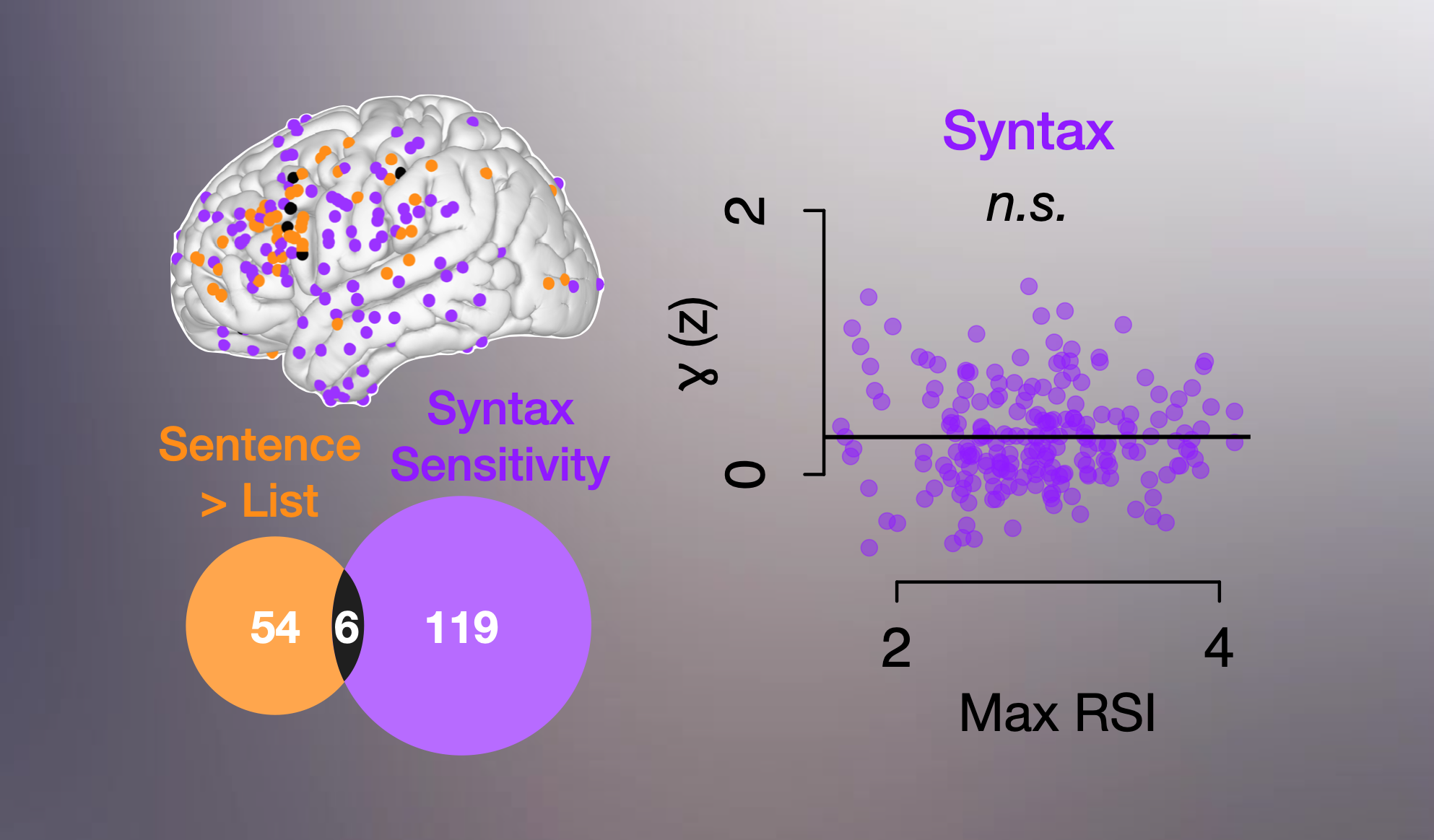

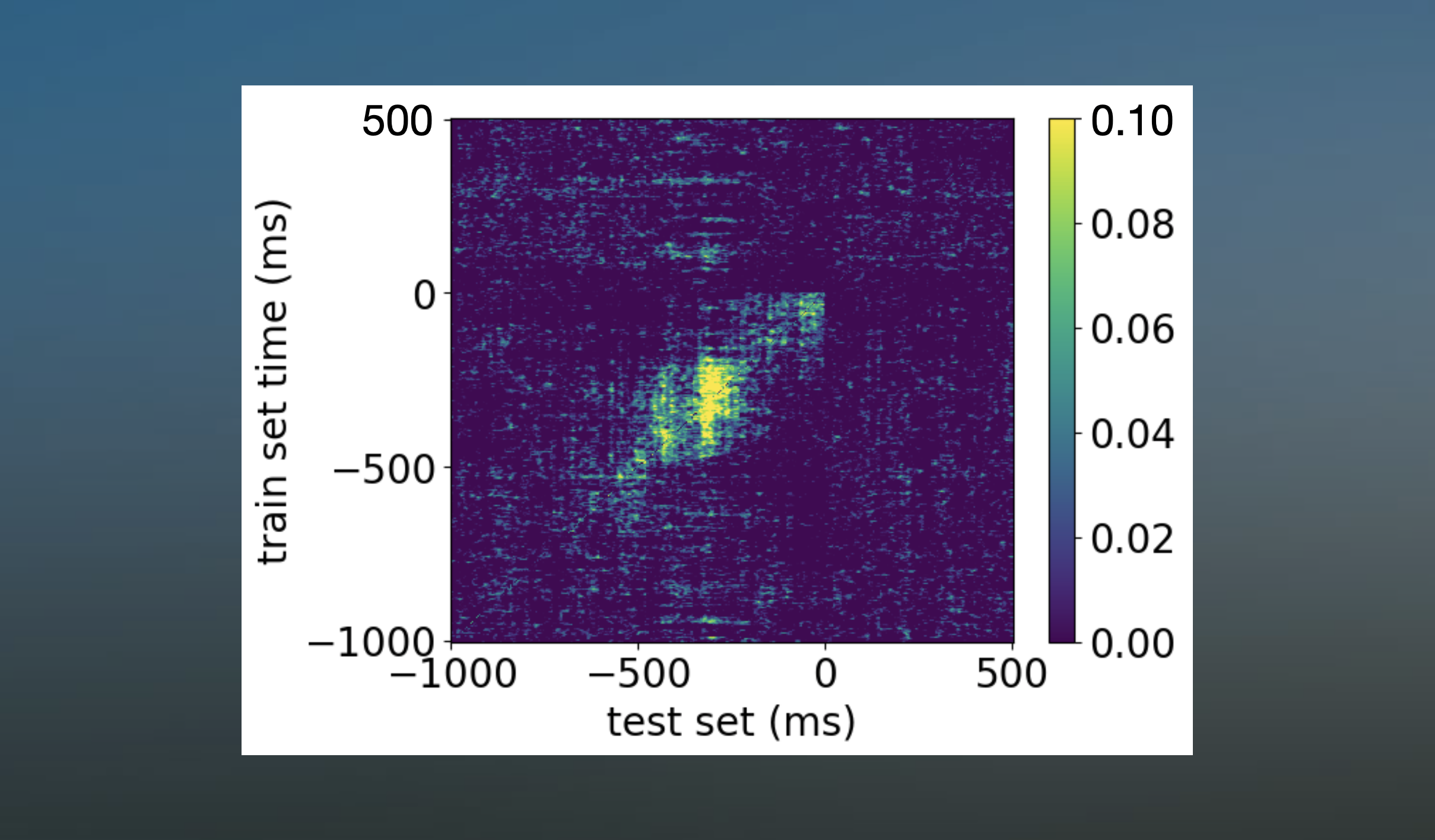

The human brain has tens of thousands of words stored in it somewhere. This project uses state-of-the-art machine learning and ECoG to predict what speakers are going to say up to a second before they begin speaking. We visualize the different stages of representation that words pass through (conceptual, phonemic, articulatory) as the brain accesses and prepares to say words, both individually and in sentences.

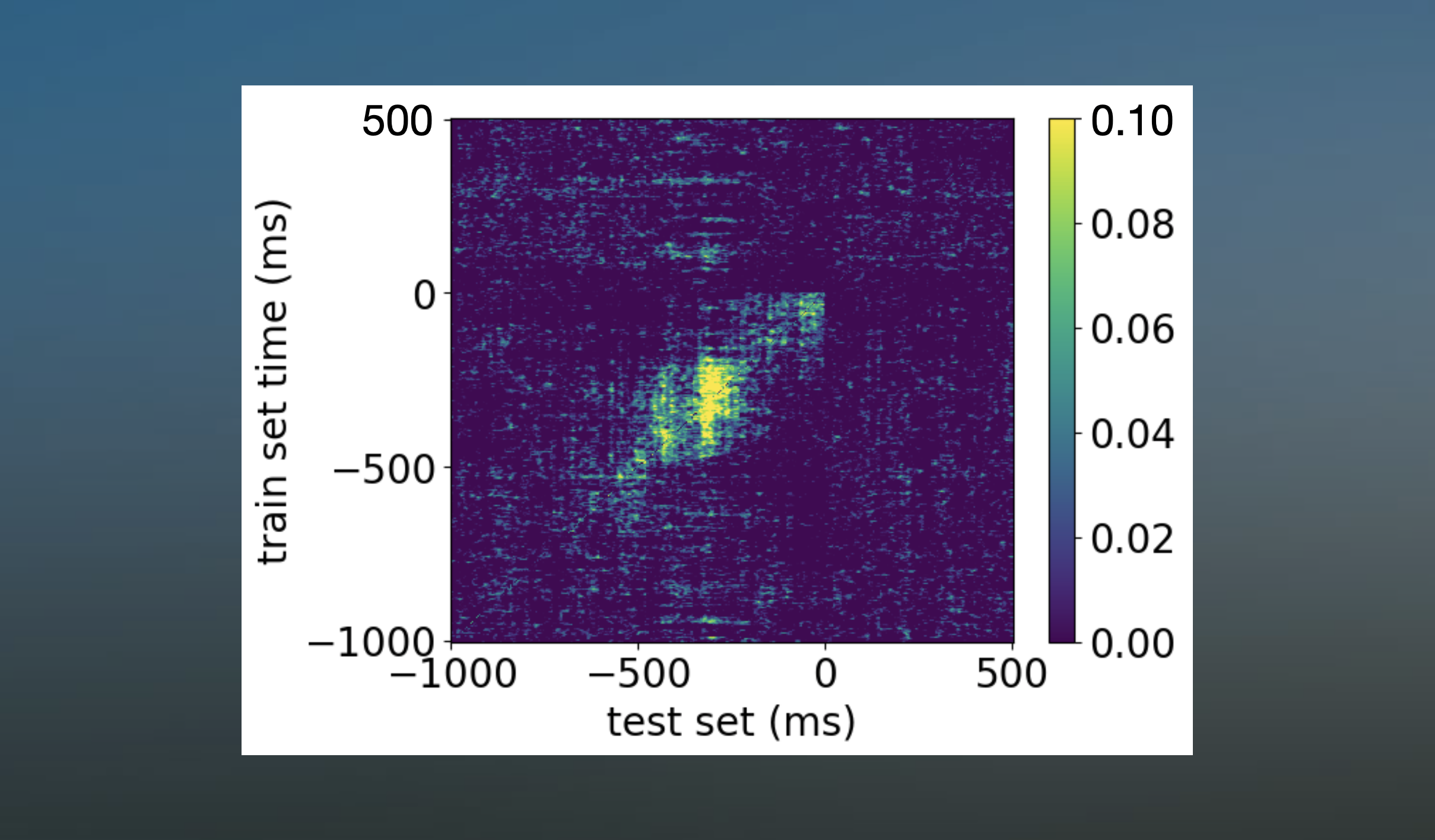

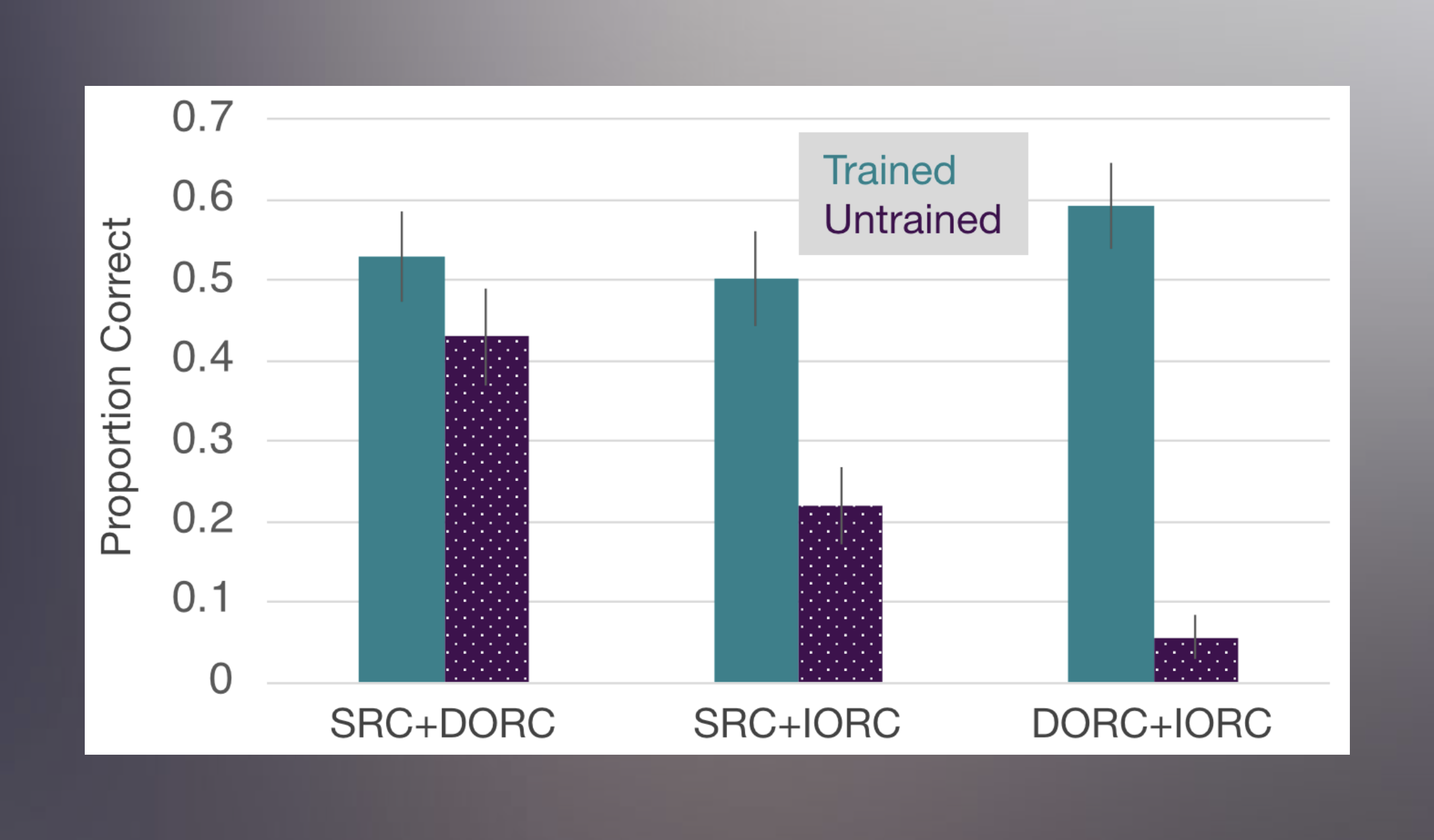

The world's 6000 languages have a surprising number of similarities in their grammatical structures. One common pattern is a contingency hierarchy: if a language has Structure X, then it necessarily has Structure Y. In this project, Vic Ferreira and I taught subjects fake languages with Structure X. Then, we asked them to describe pictures that required them to use Structure Y. Many prominent theories predict that humans can only learn structures they have been exposed to. Contrary to this prediction, participants spontaneously produced Structure Y, even though they had never heard it before.

In many languages, speakers produce pronouns that no one is sure whether they are grammatical (← case in point). These resumptive pronouns sound bad, but speakers still say them (see my 2018 paper with Matt Wagers). What does this mean for theories of syntax? Along with Aya Meltzer-Asscher, Julie Fadlon, Vic Ferreira, Titus von der Malsburg, Matt Wagers, and Eva Wittenberg, I am working on several projects which converge on the idea that the weird properties of resumptive pronouns result from speakers making do with limited cognitive resources while attempting to produce highly complex and taxing constructions.

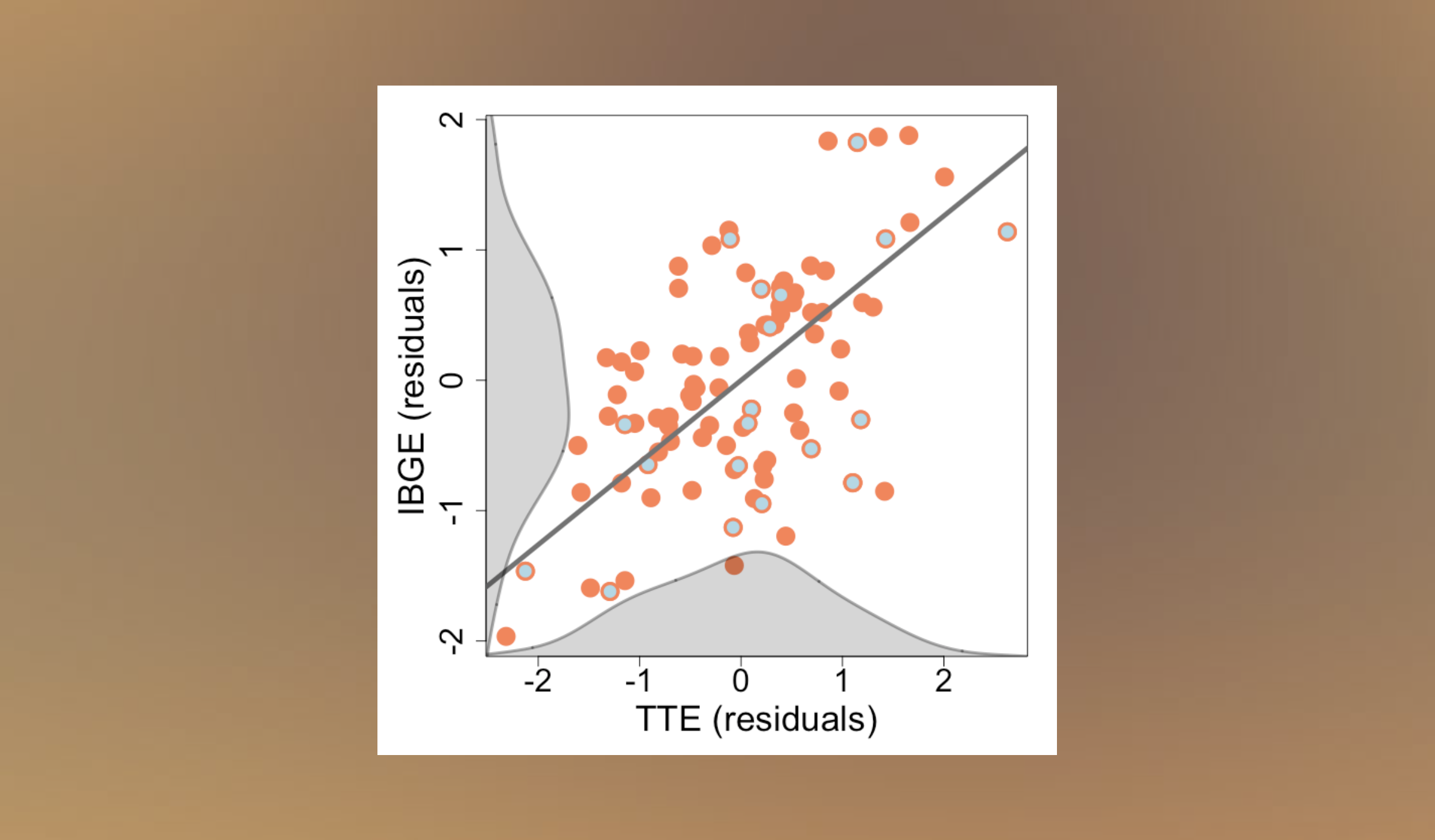

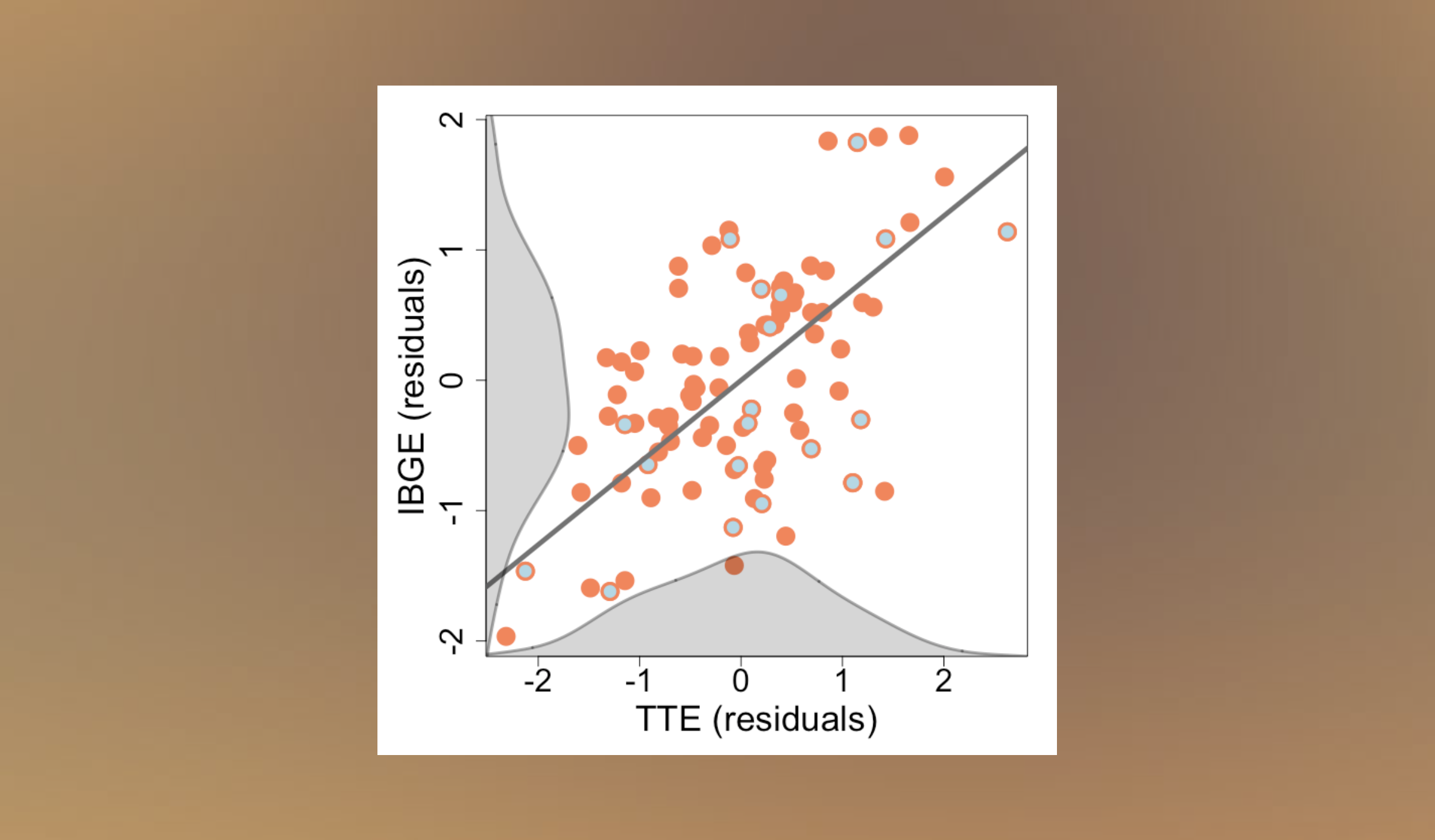

Does every native speaker of English have the same grammar? In this project I demonstrate that there are as many versions of English grammar as there are speakers of English. This finding suggests that the grammar is probably not a set of abstract "rules" derived from experience. Instead, these rules are similar to phonemes: emergent properties of a lifetime of experience.
